
A Beginner Developer's Story, 릿허브

[Paper Implementation] Implementing VGG16 (Very Deep Convolutional Networks for Large-Scale Image Recognition)

릿99 · 2023. 1. 20. 11:55

My paper review of VGG16:

https://beginnerdeveloper-lit.tistory.com/157

(Paper: https://arxiv.org/abs/1409.1556)

VGG16

In this post, I implement the VGG16 network from "Very Deep Convolutional Networks for Large-Scale Image Recognition," the paper I reviewed last time.

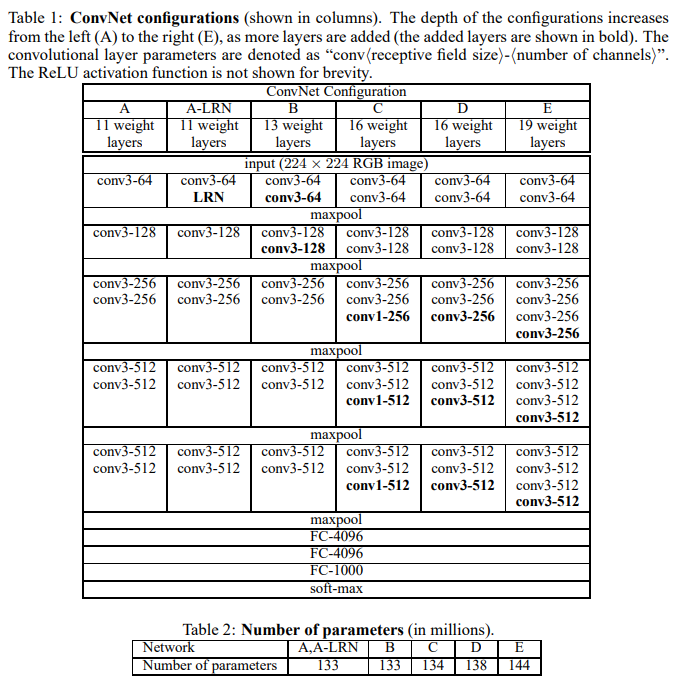

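The VGG16 architecture corresponds to configuration D in Table 1 of the paper; drawn as a detailed diagram, it looks like the following.

The "16" in VGG16 refers to its 16 weight layers: 13 convolutional layers plus 3 fully connected layers.

The network takes a 224 x 224 x 3 (RGB) image as input and recognizes it by passing it through the stages shown above.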
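The shape comments in the model code and the "13 conv + 3 FC" count can be checked with a little arithmetic. Below is a small pure-Python sketch (no TensorFlow; `trace_vgg` and `CONFIG_D` are names I made up for illustration) that walks configuration D, assuming 3x3 stride-1 'same' convolutions and 2x2 stride-2 max-pooling as described in this post, and tallies weights plus biases for every layer.

```python
# Shape and parameter bookkeeping for VGG configuration D (VGG16).
# "M" marks a 2x2, stride-2 max-pool; every number is a 3x3 conv with that many filters.
CONFIG_D = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
            512, 512, 512, "M", 512, 512, 512, "M"]


def trace_vgg(config, input_hw=224, in_channels=3, dense_units=(4096, 4096, 1000)):
    """Return (flatten_size, conv_params, dense_params, n_conv, n_dense)."""
    hw, ch = input_hw, in_channels
    conv_params, n_conv = 0, 0
    for item in config:
        if item == "M":
            hw //= 2  # 2x2 pooling with stride 2 halves height and width (all sizes are even)
        else:
            # 'same' padding + stride 1 keeps hw unchanged;
            # params = 3*3*in_channels weights per filter + 1 bias per filter
            conv_params += (3 * 3 * ch + 1) * item
            ch = item
            n_conv += 1
    flatten_size = hw * hw * ch  # 7 * 7 * 512 for a 224 x 224 input
    dense_params, fan_in = 0, flatten_size
    for units in dense_units:
        dense_params += (fan_in + 1) * units  # weight matrix + bias vector
        fan_in = units
    return flatten_size, conv_params, dense_params, n_conv, len(dense_units)


flat, conv_p, dense_p, n_conv, n_dense = trace_vgg(CONFIG_D)
print(flat, n_conv, n_dense)  # 25088 13 3
print(conv_p + dense_p)       # 138357544
```

Passing `dense_units=(4096, 4096, 1000, 2)` mirrors the head of the model in this post (an extra 2-way softmax after the 1000-unit layer), which gives 138,359,546 parameters; I would expect `model.summary()` to report the same total.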

For more details, see my VGG16 paper review linked at the top of this post.

Environment & Parameter

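❗ This implementation focuses on the structure of the paper's VGG16 model; other details (image cropping, single/multi-scale evaluation, etc.) are not fully reproduced.

❗ Also, because I used a different dataset from the one in the paper, the parameters differ as well. Please keep this in mind.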

The experimental environment is as follows.

Language : Python

Framework : TensorFlow (GPU)

Dataset : a subset of Kaggle Dog & Cat (train: 5000 dogs, 5000 cats / validation: 2000 dogs, 2000 cats)

(https://www.kaggle.com/datasets/tongpython/cat-and-dog?select=training_set)

(Some images in the dataset are corrupted; they must be deleted before training.)

Image Size : 224 x 224 x 3

Batch Size : 32

Epoch : 50
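The corrupted-image note and the subset split can both be handled in a small pre-pass before training. This is a minimal stdlib-only sketch under my own assumptions, not the author's script: `find_suspect_images` and `make_split` are hypothetical helpers, images are assumed to be `.jpg`/`.jpeg`/`.png`, and a file counts as *suspect* if it is empty, lacks a JPEG/PNG signature, or is a JPEG that does not end with the end-of-image marker. That last check is a heuristic rather than a full decode, so review the list before deleting anything. `make_split` copies a random per-class subset from an assumed `src_root/<class>/` layout into the `train_set/<class>` and `validation_set/<class>` folders that `flow_from_directory` reads (one sub-folder per class).

```python
import random
import shutil
from pathlib import Path

JPEG_SOI = b"\xff\xd8\xff"       # JPEG start-of-image marker plus the next marker's 0xFF
JPEG_EOI = b"\xff\xd9"           # JPEG end-of-image marker
PNG_SIG = b"\x89PNG\r\n\x1a\n"   # 8-byte PNG file signature
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def find_suspect_images(root):
    """Return image files under root that look broken (heuristic).

    Flags empty files, files without a JPEG/PNG signature, and JPEGs that do not
    end with the end-of-image marker. A valid JPEG with extra trailing bytes is
    also flagged, so inspect the result before deleting.
    """
    suspects = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip folders and files that are not .jpg/.jpeg/.png
        data = path.read_bytes()
        if data.startswith(PNG_SIG):
            continue  # PNG: only the signature is checked
        if not (data.startswith(JPEG_SOI) and data.endswith(JPEG_EOI)):
            suspects.append(path)
    return suspects


def make_split(src_root, dst_root, n_train, n_valid, seed=2):
    """Copy a random subset of src_root/<class>/ images into
    dst_root/train_set/<class>/ and dst_root/validation_set/<class>/."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    for class_dir in sorted(p for p in Path(src_root).iterdir() if p.is_dir()):
        files = sorted(p for p in class_dir.iterdir()
                       if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)
        if len(files) < n_train + n_valid:
            raise ValueError(f"{class_dir.name}: {len(files)} images, need {n_train + n_valid}")
        rng.shuffle(files)
        splits = {"train_set": files[:n_train],
                  "validation_set": files[n_train:n_train + n_valid]}
        for split_name, chunk in splits.items():
            out_dir = Path(dst_root) / split_name / class_dir.name
            out_dir.mkdir(parents=True, exist_ok=True)
            for f in chunk:
                shutil.copy2(f, out_dir / f.name)  # copy, leaving the source untouched
```

For example, `find_suspect_images('/path/to/training_set')` returns the paths to inspect, and `make_split(src, dst, n_train=5000, n_valid=2000)` would build a split of the sizes listed above, provided each class folder holds at least 7,000 images.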

VGG16 Code

<VGG16 Model Code>

```python
import tensorflow as tf
from tensorflow.keras import layers


def VGG16():
    '''
    < VGG16 model Architecture>
    - 13 convolution Layers + 3 fully-connected Layers
    - 3x3 convolution filter, stride = 1
    - 2x2 max pooling
    - ReLU
    '''
    # tf.set_random_seed only exists in TF1; TF2 uses tf.random.set_seed
    tf.random.set_seed(2)

    model = tf.keras.models.Sequential([
        # input = 224 x 224 x 3
        # Block 1 -> 224 x 224 x 64
        layers.Conv2D(64, (3, 3), strides=1, padding='same', activation='relu', input_shape=(224, 224, 3)),
        layers.Conv2D(64, (3, 3), strides=1, padding='same', activation='relu'),
        # 112 x 112 x 64
        layers.MaxPool2D((2, 2), padding='same'),

        # Block 2 -> 112 x 112 x 128
        layers.Conv2D(128, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(128, (3, 3), strides=1, padding='same', activation='relu'),
        # 56 x 56 x 128
        layers.MaxPool2D((2, 2), padding='same'),

        # Block 3 -> 56 x 56 x 256
        layers.Conv2D(256, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(256, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(256, (3, 3), strides=1, padding='same', activation='relu'),
        # 28 x 28 x 256
        layers.MaxPool2D((2, 2), padding='same'),

        # Block 4 -> 28 x 28 x 512
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        # 14 x 14 x 512
        layers.MaxPool2D((2, 2), padding='same'),

        # Block 5 -> 14 x 14 x 512
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        # 7 x 7 x 512
        layers.MaxPool2D((2, 2), padding='same'),

        # 25088 (= 7 x 7 x 512)
        layers.Flatten(),
        layers.Dropout(0.5),
        # 4096
        layers.Dense(4096, activation='relu'),
        # 4096
        layers.Dense(4096, activation='relu'),
        # 1000 (the paper's ImageNet output size); together with the 2-way layer
        # below, this head has 4 Dense layers rather than the paper's 3
        layers.Dense(1000, activation='relu'),
        # 2 classes (cat / dog), one-hot targets for categorical_crossentropy
        layers.Dense(2, activation='softmax'),
    ])

    model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.01),
                  loss='categorical_crossentropy',
                  metrics=['acc'])

    return model
```

<Entire Code>

```python
import os

# Choose the GPU before TensorFlow is imported: CUDA_VISIBLE_DEVICES is read
# when CUDA is initialized, so setting it afterwards has no effect.
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.keras import layers
from tensorflow.keras.preprocessing.image import ImageDataGenerator

gpus = tf.config.list_physical_devices('GPU')
if gpus:
    try:
        # Currently, memory growth needs to be the same across GPUs
        for gpu in gpus:
            tf.config.experimental.set_memory_growth(gpu, True)
    except RuntimeError as e:
        # Memory growth must be set before GPUs have been initialized
        print(e)
print("Available GPUs:", gpus)


def VGG16():
    '''
    < VGG16 model Architecture>
    - 13 convolution Layers + 3 fully-connected Layers
    - 3x3 convolution filter, stride = 1
    - 2x2 max pooling
    - ReLU
    '''
    # tf.set_random_seed only exists in TF1; TF2 uses tf.random.set_seed
    tf.random.set_seed(2)

    model = tf.keras.models.Sequential([
        # input = 224 x 224 x 3
        # Block 1 -> 224 x 224 x 64
        layers.Conv2D(64, (3, 3), strides=1, padding='same', activation='relu', input_shape=(224, 224, 3)),
        layers.Conv2D(64, (3, 3), strides=1, padding='same', activation='relu'),
        # 112 x 112 x 64
        layers.MaxPool2D((2, 2), padding='same'),

        # Block 2 -> 112 x 112 x 128
        layers.Conv2D(128, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(128, (3, 3), strides=1, padding='same', activation='relu'),
        # 56 x 56 x 128
        layers.MaxPool2D((2, 2), padding='same'),

        # Block 3 -> 56 x 56 x 256
        layers.Conv2D(256, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(256, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(256, (3, 3), strides=1, padding='same', activation='relu'),
        # 28 x 28 x 256
        layers.MaxPool2D((2, 2), padding='same'),

        # Block 4 -> 28 x 28 x 512
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        # 14 x 14 x 512
        layers.MaxPool2D((2, 2), padding='same'),

        # Block 5 -> 14 x 14 x 512
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        layers.Conv2D(512, (3, 3), strides=1, padding='same', activation='relu'),
        # 7 x 7 x 512
        layers.MaxPool2D((2, 2), padding='same'),

        # 25088 (= 7 x 7 x 512)
        layers.Flatten(),
        layers.Dropout(0.5),
        # 4096
        layers.Dense(4096, activation='relu'),
        # 4096
        layers.Dense(4096, activation='relu'),
        # 1000 (the paper's ImageNet output size); together with the 2-way layer
        # below, this head has 4 Dense layers rather than the paper's 3
        layers.Dense(1000, activation='relu'),
        # 2 classes (cat / dog), one-hot targets for categorical_crossentropy
        layers.Dense(2, activation='softmax'),
    ])

    model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.01),
                  loss='categorical_crossentropy',
                  metrics=['acc'])

    return model


# Dataset (Kaggle Cat and Dog Dataset)
# flow_from_directory expects one sub-folder per class inside each split folder
dataset_path = '/home/kellybjs/Cat_Dog_Dataset'
train_dataset_path = os.path.join(dataset_path, 'train_set')
valid_dataset_path = os.path.join(dataset_path, 'validation_set')

train_data_generator = ImageDataGenerator(rescale=1. / 255)  # scale pixels to [0, 1]
train_dataset = train_data_generator.flow_from_directory(train_dataset_path,
                                                         shuffle=True,
                                                         target_size=(224, 224),
                                                         batch_size=32,
                                                         class_mode='categorical')  # one-hot labels

valid_data_generator = ImageDataGenerator(rescale=1. / 255)
valid_dataset = valid_data_generator.flow_from_directory(valid_dataset_path,
                                                         shuffle=True,
                                                         target_size=(224, 224),
                                                         batch_size=32,
                                                         class_mode='categorical')

# Train
print("Start Train!")
model = VGG16()
model.summary()
# model.fit accepts the generators directly; fit_generator is deprecated in TF2
train = model.fit(train_dataset, epochs=50, validation_data=valid_dataset)

# Accuracy graph
plt.figure(1)
plt.plot(train.history['acc'])
plt.plot(train.history['val_acc'])
plt.title('Accuracy')
plt.ylabel('accuracy')
plt.xlabel('epoch')
plt.legend(['train', 'validation'], loc='upper left')
plt.savefig('VGG16_Accuracy_1.png')
print("Saved Accuracy graph")

# Loss graph
plt.figure(2)
plt.plot(train.history['loss'])
plt.plot(train.history['val_loss'])
plt.title('Loss')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(['train', 'validation'], loc='upper left')
plt.savefig('VGG16_Loss_1.png')
print("Saved Loss graph")

model.save('VGG16.h5')
```

Result

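These are the training and validation accuracy results from the code above.

Training accuracy reaches a maximum of about 90%, and validation accuracy about 80%.

I'll cover it in a later post, but with Fine Training the model performs very well, reaching up to about 99% accuracy.

These are the training and validation loss results from the code above.

The loss decreases gradually in both curves, but unfortunately the validation loss oscillates considerably in the later epochs.

Here too, Fine Training performs better, bringing the loss close to 0.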
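To read peak values off a run instead of eyeballing the plots, the `History` object returned by `model.fit` can be summarized directly. This is a minimal sketch: `summarize_history` is a hypothetical helper, it assumes the `acc`/`val_acc`/`loss`/`val_loss` keys that `metrics=['acc']` produces in the code above, and it uses the population standard deviation of the last `tail` validation losses as one rough way to put a number on the late-epoch oscillation.

```python
import statistics


def summarize_history(history, tail=10):
    """Summarize a Keras History.history dict (one list entry per epoch).

    Assumes the keys 'acc', 'val_acc', 'loss', 'val_loss' (metrics=['acc']).
    """
    val_acc, val_loss = history["val_acc"], history["val_loss"]
    best_acc_idx = max(range(len(val_acc)), key=val_acc.__getitem__)
    best_loss_idx = min(range(len(val_loss)), key=val_loss.__getitem__)
    return {
        "max_train_acc": max(history["acc"]),
        "max_val_acc": val_acc[best_acc_idx],
        "best_val_acc_epoch": best_acc_idx + 1,  # 1-based epoch number
        "min_train_loss": min(history["loss"]),
        "min_val_loss": val_loss[best_loss_idx],
        "min_val_loss_epoch": best_loss_idx + 1,
        # population std of the last `tail` validation losses:
        # a rough measure of how much the curve oscillates late in training
        "val_loss_tail_std": statistics.pstdev(val_loss[-tail:]),
    }
```

After training, `summarize_history(train.history)` returns these figures for that particular run; they depend entirely on the data and the seed.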
