
A Beginner Developer's Story, 릿허브

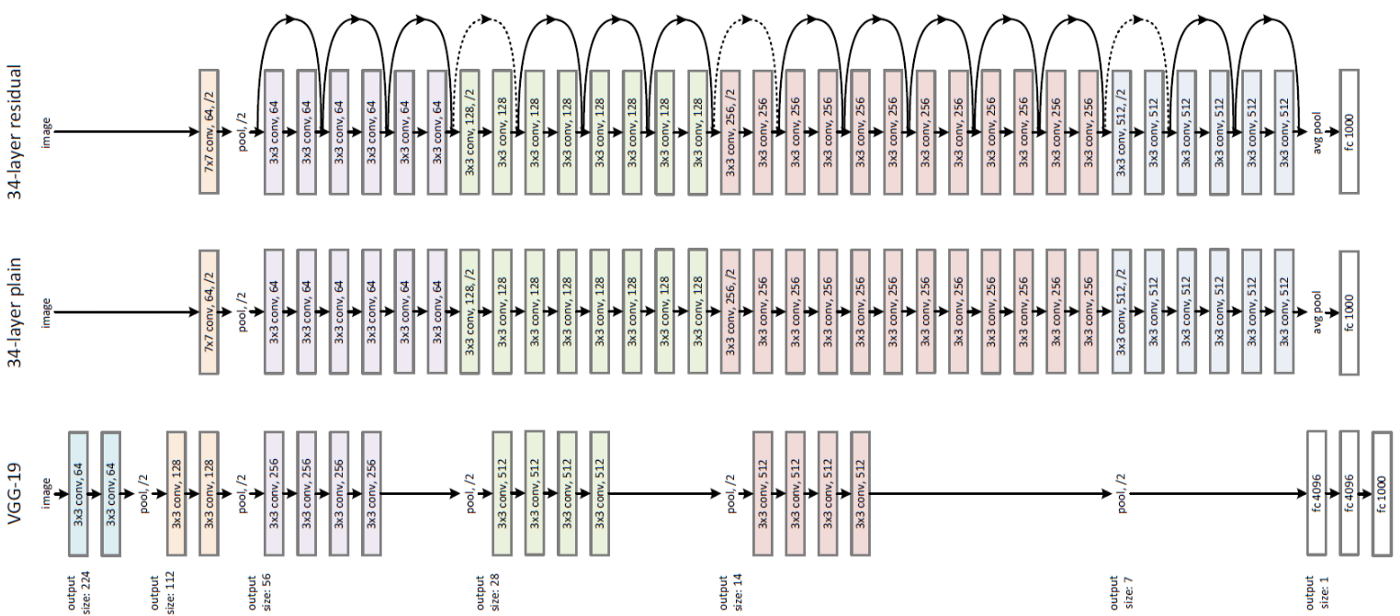

[Paper Implementation] ResNet (Deep Residual Learning for Image Recognition) Implementation

Paper review of ResNet: [Paper Review] ResNet (Deep Residual Learning for Image Recognition), https://beginnerdeveloper-lit.tistory.com/159

Original paper: https://arxiv.org/abs/1512.03385

ResNet50

In this post, I implement ResNet50, one of the ResNet networks from the "Deep Residual Learning for Image Recognition" paper I reviewed last time.

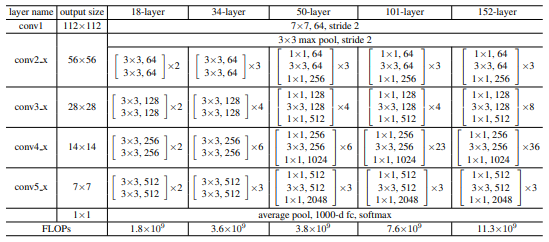

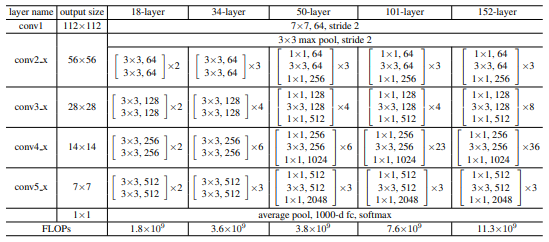

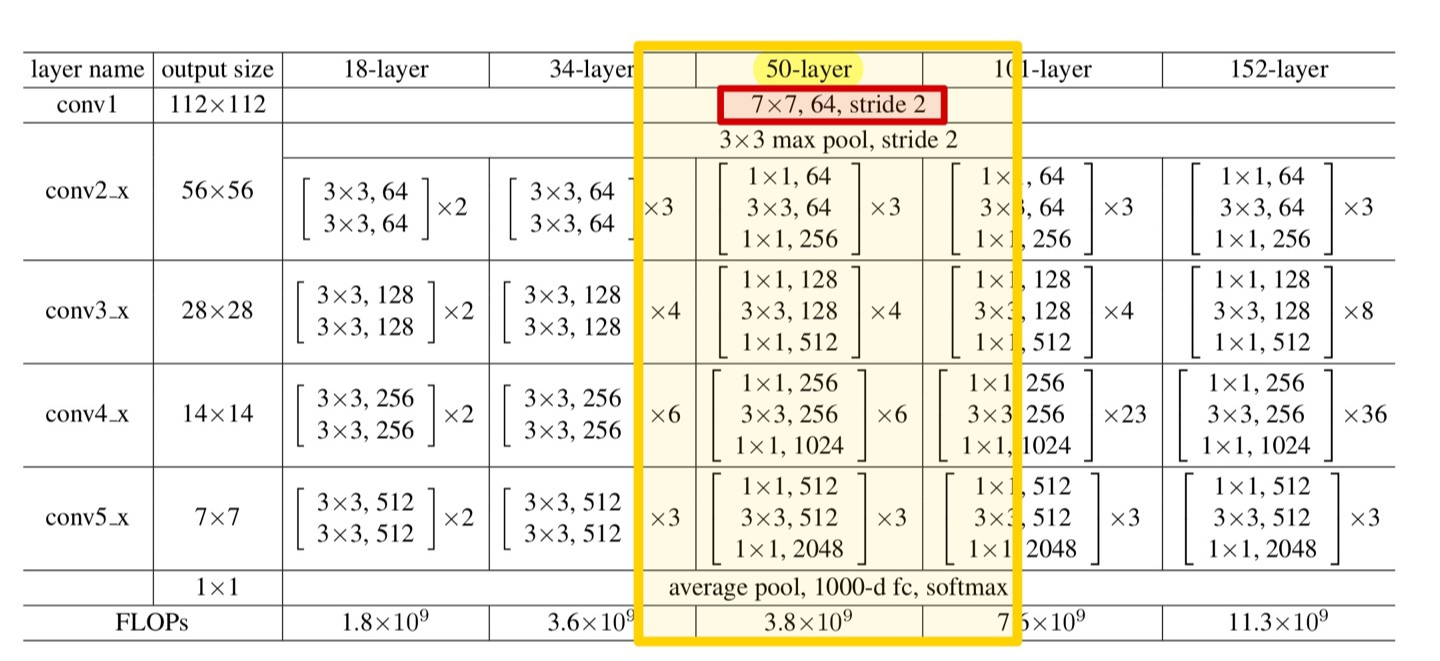

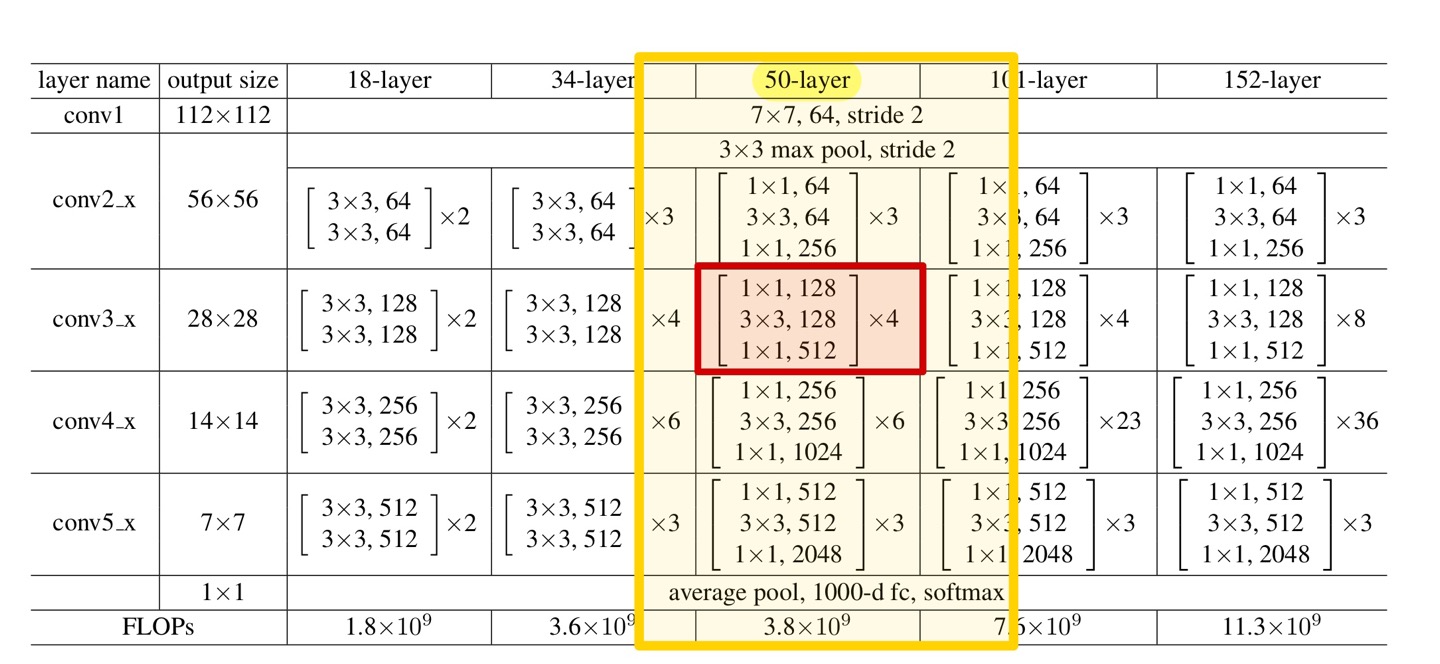

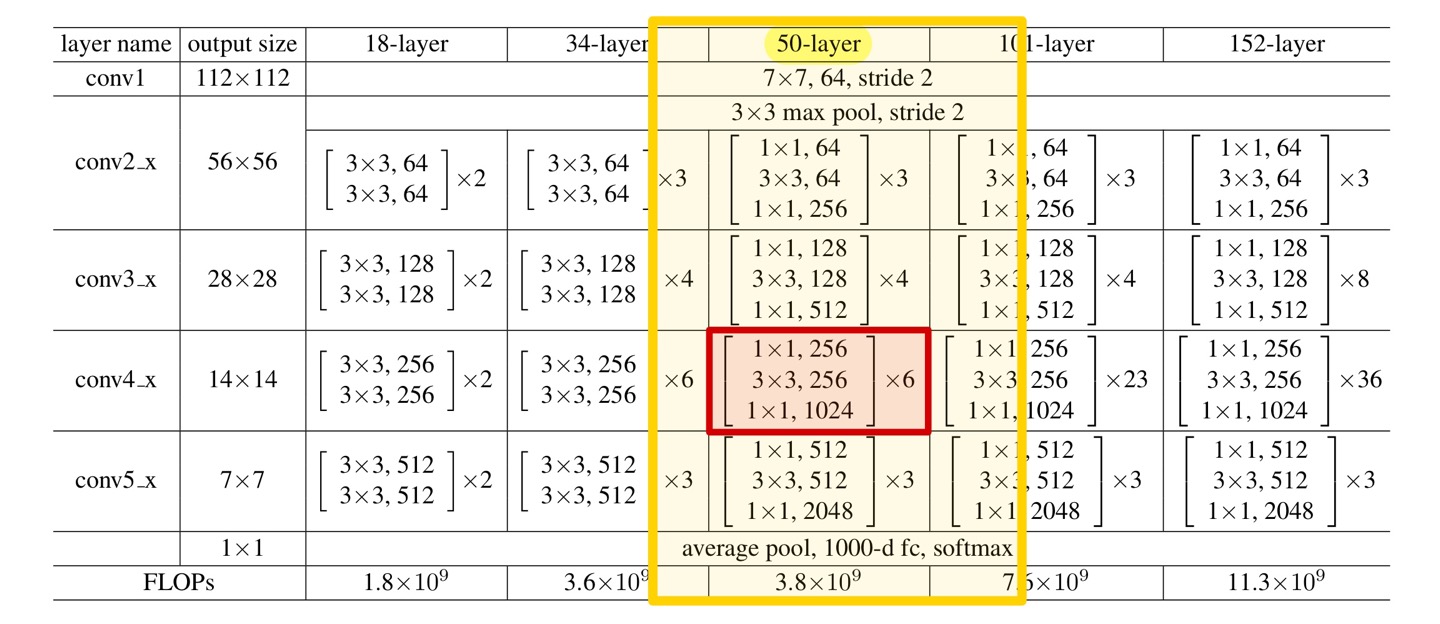

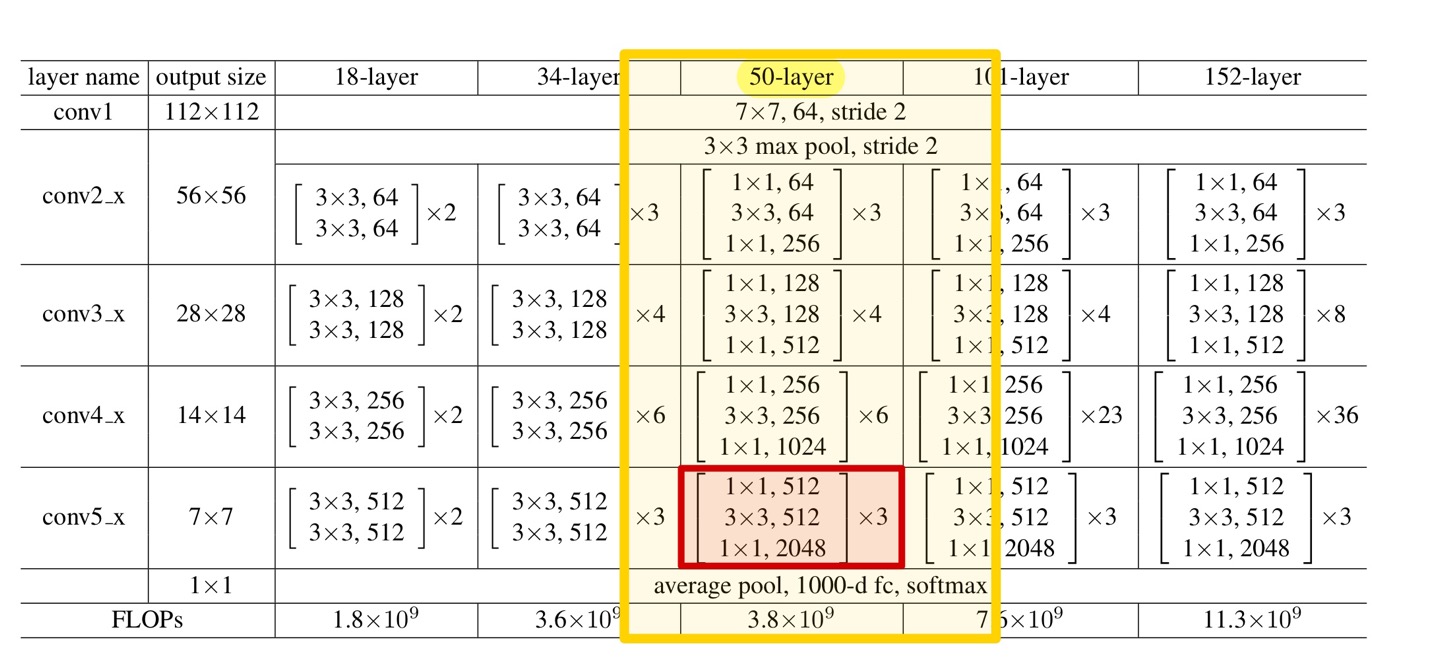

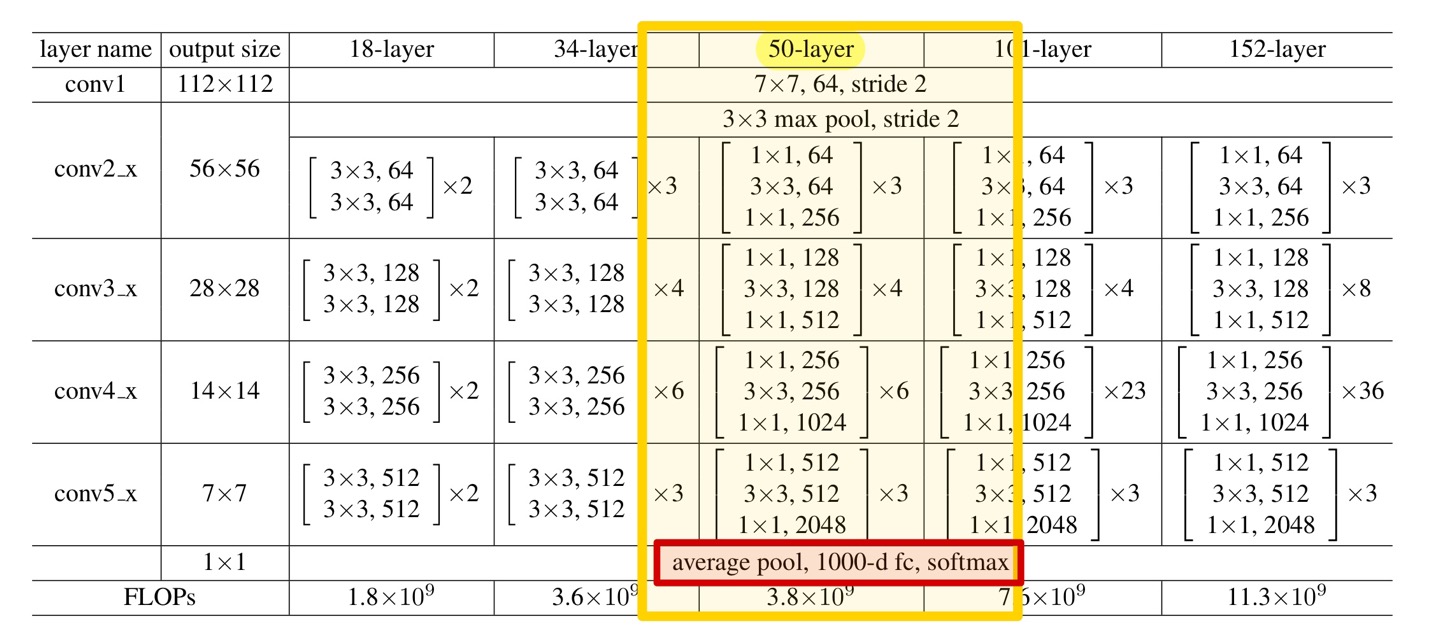

ResNet50의 자세한 구조는 아래 Table을 참고하자.

논문에서 주로 소개 및 비교하는 모델은 34-Layer지만, 필자는 50-Layer를 구현했다.

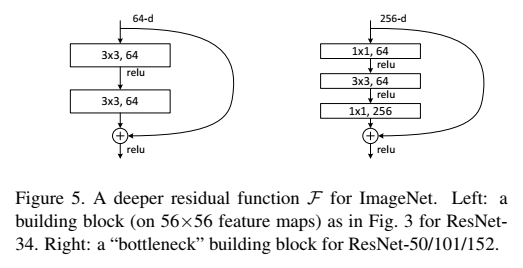

34-Layer 모델과의 차이점은 각 Convolution Layer의 앞 뒤로 1 x 1 convolution이 추가된 것인데,

이는 저번 논문 리뷰에서도 언급한 Bottleneck 구조이다.

Bottleneck 구조란 말 그대로 "병목 구조"인데,

다음과 같이 차원이 줄었다가 늘어나는 현상을 병목 현상이라 한다.

ResNet에서 이야기하는 BottleNeck 구조 또한 이와 동일하다.

여기서 1 x 1 convolution은 Dimension을 줄였다가 키우는 역할을 하는데,

이는 3x3 Layer의 Input/Output Dimension을 줄이기 위해서이다.
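To see how much the bottleneck saves, here is a small parameter-count sketch (convolution weights only; biases and BN parameters are ignored for simplicity). It compares the 256-channel bottleneck block of conv2_x (1 x 1 with 64 filters, 3 x 3 with 64, 1 x 1 with 256) against the basic block of two 3 x 3 convolutions, once at 64 channels as in ResNet34 and once, hypothetically, at 256 channels.

```python
def conv_weights(k, c_in, c_out):
    """Number of weights in a k x k convolution from c_in to c_out channels (no bias)."""
    return k * k * c_in * c_out


def bottleneck_weights(c_io, c_mid):
    """1x1 reduce -> 3x3 -> 1x1 restore, as in the ResNet50 bottleneck block."""
    return (conv_weights(1, c_io, c_mid)
            + conv_weights(3, c_mid, c_mid)
            + conv_weights(1, c_mid, c_io))


def basic_block_weights(c):
    """Two stacked 3x3 convolutions with c channels, as in the ResNet34 basic block."""
    return 2 * conv_weights(3, c, c)


if __name__ == "__main__":
    print("bottleneck 256-64-256:", bottleneck_weights(256, 64))  # 69632
    print("basic block, 64 ch:", basic_block_weights(64))         # 73728
    print("basic block, 256 ch:", basic_block_weights(256))       # 1179648
```

The bottleneck handles 256-channel feature maps with roughly the same weight count as a 64-channel basic block, while two 3 x 3 layers operating directly at 256 channels would need about 17 times as many weights.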

Environment & Parameter

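❗ This implementation focuses on the structure of the ResNet50 model in the paper; other details (image cropping, mean subtraction, etc.) are not fully implemented.

❗ Also, because I used a different dataset from the paper, the parameters differ as well. Please keep this in mind.

The experimental environment is as follows.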

Language : Python

Framework : Tensorflow (GPU)

Dataset : a subset of Kaggle Dog & Cat (train: Dog 5000, Cat 5000 / validation: Dog 2000, Cat 2000)

(https://www.kaggle.com/datasets/tongpython/cat-and-dog?select=training_set)

(Some images in the dataset are corrupted, so they must be deleted before training.)
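Finding the corrupted files can be scripted. The sketch below is a lightweight heuristic, not a full decoder: it flags `.jpg`/`.jpeg` files that are empty or do not start with the JPEG Start-Of-Image marker (`FF D8 FF`). Truncated files that still have a valid header would pass, so decoding each image with an image library is the stricter option. The function name and the dataset path are hypothetical.

```python
import os

JPEG_SOI = b"\xff\xd8\xff"  # every JPEG file starts with the Start-Of-Image marker


def find_suspicious_images(root, exts=(".jpg", ".jpeg")):
    """Return paths under `root` whose JPEG files are empty or lack a JPEG header."""
    bad = []
    for dirpath, _, filenames in os.walk(root):
        for name in filenames:
            if not name.lower().endswith(exts):
                continue  # ignore non-image files
            path = os.path.join(dirpath, name)
            with open(path, "rb") as f:
                header = f.read(3)
            if header != JPEG_SOI:
                bad.append(path)
    return sorted(bad)


if __name__ == "__main__":
    # Hypothetical path; point it at your own train/validation folders.
    for path in find_suspicious_images("/path/to/Cat_Dog_Dataset"):
        print("suspicious:", path)
        # os.remove(path)  # uncomment only after checking the list
```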

Image Size : 224 x 224 x 3

Batch Size : 32

Epoch : 50

Learning Rate : 0.001 (SGD optimizer, momentum = 0.9)
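For reference, the Keras `SGD` optimizer with `momentum` keeps a velocity per weight and, per the Keras documentation, updates it as `velocity = momentum * velocity - learning_rate * g`, then `w = w + velocity` (non-Nesterov form). A pure-Python sketch of that rule for a single scalar weight, using the learning rate and momentum listed above:

```python
def sgd_momentum_step(w, velocity, grad, lr=0.001, momentum=0.9):
    """One SGD-with-momentum update in the form documented for Keras' SGD optimizer."""
    velocity = momentum * velocity - lr * grad
    return w + velocity, velocity


if __name__ == "__main__":
    w, v = 0.0, 0.0
    for step in range(3):
        # Constant gradient of 1.0: the step size grows as momentum accumulates.
        w, v = sgd_momentum_step(w, v, grad=1.0)
        print(step, round(w, 6), round(v, 6))
```

With a constant gradient, the velocity approaches `lr / (1 - momentum)` = 0.01, so the effective step becomes ten times the raw learning rate.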

ResNet50 Code

<ResNet50 Layers Code>

Let's go through each layer of the ResNet50 model along with the code.

First, recall the structure of the 50-layer ResNet shown in the table above.

[ Conv 1 ]

Let's start with the first convolution layer.

The input image is 224 x 224, and the layer uses 64 filters with a 7 x 7 kernel and stride = 2. Because of stride = 2, the output size becomes 112 x 112.

In the ResNet paper's implementation, Batch Normalization is applied right after each convolution and before the activation, so I did the same.

# input = 224 x 224 x 3
# Conv1 -> 1
x = layers.Conv2D(64, (7, 7), strides=2, padding='same', input_shape=(224, 224, 3))(x)  # 112x112x64
x = layers.BatchNormalization()(x)
x = layers.Activation('relu')(x)
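What `BatchNormalization` computes during training can be sketched in plain Python for a single channel: normalize with the batch mean and variance, then scale and shift with the learnable `gamma` and `beta`. `epsilon=1e-3` matches the Keras default; at inference time Keras uses moving averages instead of batch statistics, which this sketch omits.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, epsilon=1e-3):
    """Training-mode batch normalization of one channel's activations across a batch."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)  # biased (population) variance
    return [gamma * (v - mean) / math.sqrt(var + epsilon) + beta for v in values]


def relu(values):
    return [max(0.0, v) for v in values]


if __name__ == "__main__":
    conv_out = [2.0, 4.0, 6.0, 8.0]       # toy convolution outputs for one channel
    print(relu(batch_norm(conv_out)))      # Conv -> BN -> ReLU, the order used in this post
```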

[ Conv 2 ]

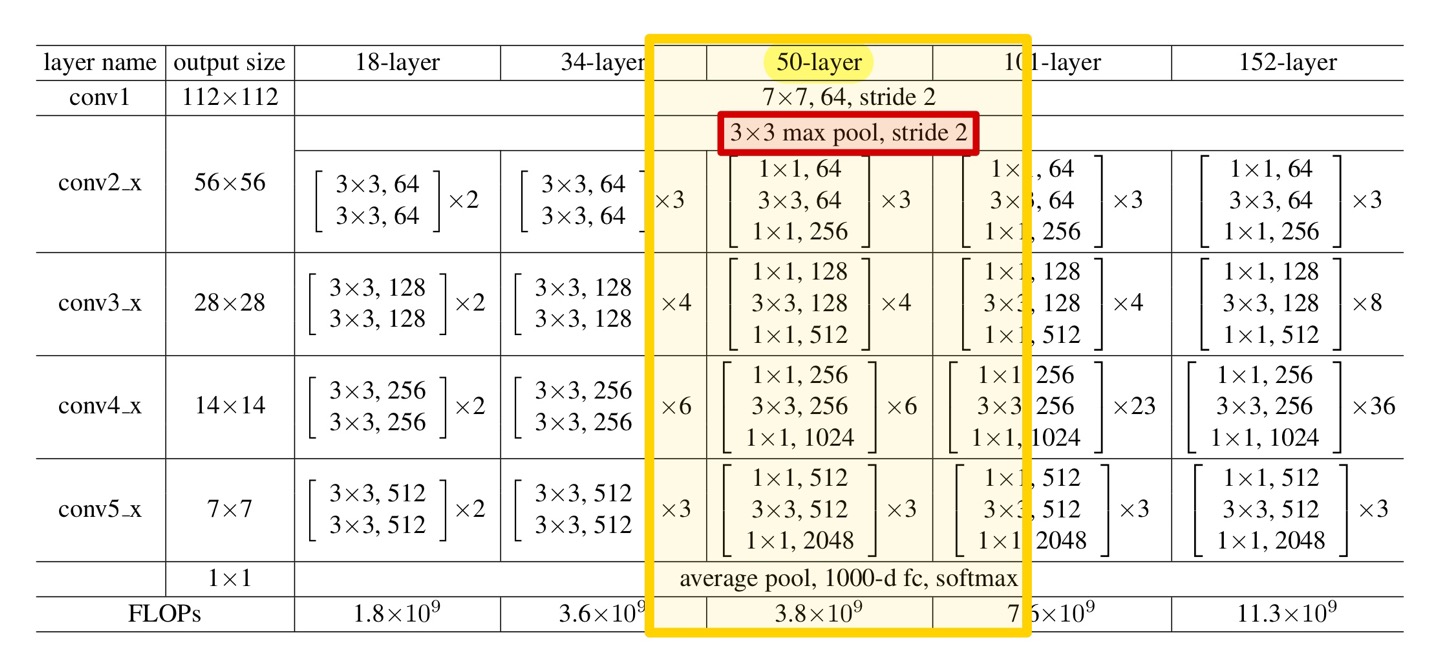

This is the second convolution stage.

Before the convolutions of the second stage begin, 3 x 3 max pooling with stride = 2 is applied. After max pooling, the output size is 56 x 56.

x = layers.MaxPool2D((3, 3), 2, padding='same')(x)  # 56x56x64
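The spatial sizes quoted above follow from how Keras computes output sizes with `padding='same'`: the output length is `ceil(input / stride)`, independent of the kernel size. For comparison, the sketch also includes the usual explicit-padding formula `floor((n + 2p - k) / s) + 1`; with padding 3 for the 7 x 7 convolution and padding 1 for the 3 x 3 pooling it gives the same 112 and 56.

```python
import math


def same_padding_output(size, stride):
    """Output length along one axis for a Keras conv/pool layer with padding='same'."""
    return math.ceil(size / stride)


def explicit_padding_output(size, kernel, stride, pad):
    """Standard formula floor((n + 2p - k) / s) + 1 for explicit zero padding p."""
    return (size + 2 * pad - kernel) // stride + 1


if __name__ == "__main__":
    size = same_padding_output(224, 2)   # Conv1: 7x7, stride 2
    print("after conv1:", size)
    size = same_padding_output(size, 2)  # 3x3 max pooling, stride 2
    print("after max pooling:", size)
    print(explicit_padding_output(224, 7, 2, 3), explicit_padding_output(112, 3, 2, 1))
```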

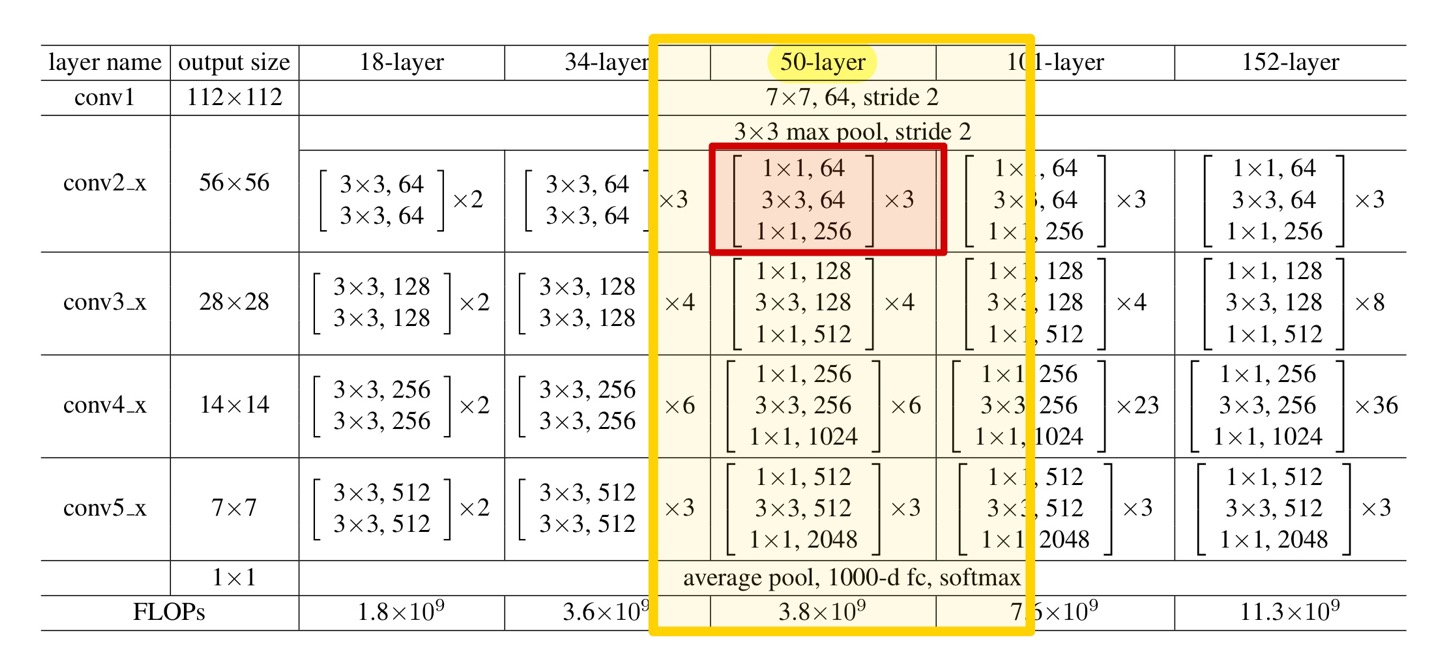

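Next comes the main convolution computation.

Following the values in the table, the 1 x 1, 3 x 3, and 1 x 1 convolutions use 64, 64, and 256 filters, respectively, and this block is repeated 3 times.

There is one important element of ResNet that must be remembered and implemented here: the shortcut connection.

It adds the input back to the output so that the block has a residual structure: for an input x, the layers should produce F(x) + x.

First, before the main convolution computation, store x (the output of the previous layer, which is the input of the current layer) as the shortcut.

shortcut = x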

# Conv2_x -> 3
for i in range(3):
    if i == 0:
        x = layers.Conv2D(64, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(64, (3, 3), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(256, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        # In case of i = 0 (to match dimensions):
        # project the shortcut so it can be added to x, 56x56x64 -> 56x56x256
        shortcut = layers.Conv2D(256, (1, 1), strides=1, padding='same')(shortcut)
        shortcut = layers.BatchNormalization()(shortcut)
        shortcut = layers.Activation('relu')(shortcut)
        x = layers.Add()([x, shortcut])
        shortcut = x  # 56x56x256

The for loop repeats the convolution block described above 3 times. In ResNet50, the four stages (conv2_x to conv5_x) repeat their block 3, 4, 6, and 3 times, respectively.
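The "50" in ResNet50 can be checked from these repetition counts: each bottleneck block contributes three convolution layers, plus Conv1 and the final fully connected layer (pooling layers and the 1 x 1 projection shortcuts are not counted, following the paper's convention). The ResNet101 and ResNet152 counts below are taken from the same table in the paper.

```python
# Bottleneck blocks per stage (conv2_x .. conv5_x) from the paper's architecture table.
STAGE_BLOCKS = {
    "ResNet50": (3, 4, 6, 3),
    "ResNet101": (3, 4, 23, 3),
    "ResNet152": (3, 8, 36, 3),
}


def count_weight_layers(blocks_per_stage, convs_per_block=3):
    """Conv1 + (3 convolutions per bottleneck block) + the final fc layer."""
    return 1 + convs_per_block * sum(blocks_per_stage) + 1


if __name__ == "__main__":
    for name, blocks in STAGE_BLOCKS.items():
        print(name, count_weight_layers(blocks))
```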

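First, consider the case i == 0, i.e., the first block of the stage.

Let F(x) denote the result of passing the input x through the convolution, BN, and activation operations listed in the table.

The dimension of this output x, i.e. F(x), differs from that of the original input x (= shortcut), so an operation is needed to make the two match.

Therefore, the shortcut goes through the same operation that was applied last to F(x) (a 1 x 1 convolution with 256 filters, followed by BN and ReLU), which brings it to the same dimension. This corresponds to the projection shortcut in the paper.

Once the dimensions of shortcut (input) and x (= F(x), output) match, the shortcut is added to the result F(x) produced by the layers: F(x) + x, where x = shortcut.

The resulting output x is then passed on as the input of the next block, and the next block's shortcut (= input) is set to the current x.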
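One difference from the paper is worth noting here. In the paper, the second nonlinearity is applied after the addition, so a block outputs ReLU(F(x) + x), and the projection shortcut is a 1 x 1 convolution (with BN) without ReLU. The code in this post applies ReLU to F(x), and to the projected shortcut, before adding. The toy element-wise sketch below (plain Python, hypothetical helper names, identity shortcut) shows the practical effect: with ReLU before the addition, a block can only add non-negative values, so it can never decrease any element of its input.

```python
def relu(v):
    return [max(0.0, a) for a in v]


def add(a, b):
    return [p + q for p, q in zip(a, b)]


def block_post_activation(x, residual):
    """Paper ordering: ReLU(F(x) + x)."""
    return relu(add(residual, x))


def block_relu_before_add(x, residual):
    """Ordering used in this post's code: ReLU(F(x)) + x."""
    return add(relu(residual), x)


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0]        # block input (identity shortcut)
    f_x = [-0.5, 0.5, -4.0]    # toy residual-branch output before its last ReLU
    print("paper:    ", block_post_activation(x, f_x))   # [0.5, 2.5, 0.0]
    print("this post:", block_relu_before_add(x, f_x))   # [1.0, 2.5, 3.0]
```

To follow the paper in the Keras code, one would move `layers.Activation('relu')` from the end of the residual branch (and from the shortcut) to right after `layers.Add()`.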

# Conv2_x -> 3
for i in range(3):
    if i == 0:
        ...
    else:
        x = layers.Conv2D(64, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(64, (3, 3), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(256, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Add()([x, shortcut])
        shortcut = x  # 56x56x256

Now let's look at the case i != 0.

This case works the same way, except that the shortcut already has the same dimension as the output F(x) (= x). The dimension-matching operation above is therefore unnecessary, and the input is added unchanged (an identity shortcut).

Everything else is the same as the code above.
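The rule for choosing between the two cases can be stated directly: a projection (1 x 1 convolution) is needed whenever the block changes the number of channels or the spatial size, and an identity shortcut is enough otherwise. A sketch using (height, width, channels) tuples; the helper name `shortcut_type` is hypothetical.

```python
def shortcut_type(in_shape, out_shape):
    """'identity' if the block keeps (height, width, channels) unchanged, else 'projection'."""
    return "identity" if in_shape == out_shape else "projection"


if __name__ == "__main__":
    # conv2_x of ResNet50: max pooling yields 56x56x64, each block outputs 56x56x256.
    shapes = [(56, 56, 64), (56, 56, 256), (56, 56, 256), (56, 56, 256)]
    for i in range(3):
        print(f"conv2_{i + 1}:", shortcut_type(shapes[i], shapes[i + 1]))
    # conv3_1 halves the spatial size and doubles the channels, so it also needs a projection.
    print("conv3_1:", shortcut_type((56, 56, 256), (28, 28, 512)))
```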

The full code for the second convolution stage is as follows.

x = layers.MaxPool2D((3, 3), 2, padding='same')(x)  # 56x56x64
shortcut = x

# Conv2_x -> 3
for i in range(3):
    if i == 0:
        x = layers.Conv2D(64, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(64, (3, 3), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(256, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        # In case of i = 0 (to match dimensions):
        # project the shortcut so it can be added to x, 56x56x64 -> 56x56x256
        shortcut = layers.Conv2D(256, (1, 1), strides=1, padding='same')(shortcut)
        shortcut = layers.BatchNormalization()(shortcut)
        shortcut = layers.Activation('relu')(shortcut)
        x = layers.Add()([x, shortcut])
        shortcut = x  # 56x56x256
    else:
        x = layers.Conv2D(64, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(64, (3, 3), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(256, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Add()([x, shortcut])
        shortcut = x  # 56x56x256

[ Conv 3 ]

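This is the third convolution stage.

From the third through the fifth convolution stages, the code is the same as the Conv 2 code above except for the number of filters and the number of repetitions given in the table, plus one more change: the first block of each stage uses stride = 2 (on the first 1 x 1 convolution and on the shortcut projection) to halve the feature map size.

Since the explanation is otherwise identical, I will skip it for Conv 3 to Conv 5.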
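Because only the filter counts, repetitions, and first-block stride change, the four stages can be described by a small configuration table. The sketch below (plain Python, hypothetical names) walks that table and lists the output shape of every block, which should match the shape comments in the code (56x56x256, 28x28x512, 14x14x1024, 7x7x2048). It assumes, as in this post's code, that the stride-2 downsampling happens in the first block of conv3_x to conv5_x and that all layers use `padding='same'`.

```python
import math

# (stage, bottleneck width, output channels, blocks, stride of the first block)
RESNET50_STAGES = [
    ("conv2", 64, 256, 3, 1),
    ("conv3", 128, 512, 4, 2),
    ("conv4", 256, 1024, 6, 2),
    ("conv5", 512, 2048, 3, 2),
]


def trace_shapes(input_shape=(56, 56, 64), stages=RESNET50_STAGES):
    """Return (block, (1x1, 3x3, 1x1) filter counts, output shape) for every block."""
    h, w, c = input_shape
    rows = []
    for name, width, out_channels, blocks, first_stride in stages:
        for i in range(blocks):
            stride = first_stride if i == 0 else 1
            # padding='same' -> ceil(size / stride)
            h, w, c = math.ceil(h / stride), math.ceil(w / stride), out_channels
            rows.append((f"{name}_{i + 1}", (width, width, out_channels), (h, w, c)))
    return rows


if __name__ == "__main__":
    for block, filters, shape in trace_shapes():
        print(block, filters, shape)
```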

# Conv3_x -> 4
for i in range(4):
    if i == 0:
        x = layers.Conv2D(128, (1, 1), strides=2, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(128, (3, 3), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(512, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        # In case of i = 0 (to match dimensions):
        # project the shortcut so it can be added to x, 56x56x256 -> 28x28x512
        shortcut = layers.Conv2D(512, (1, 1), strides=2, padding='same')(shortcut)
        shortcut = layers.BatchNormalization()(shortcut)
        shortcut = layers.Activation('relu')(shortcut)
        x = layers.Add()([x, shortcut])
        shortcut = x  # 28x28x512
    else:
        x = layers.Conv2D(128, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(128, (3, 3), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(512, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Add()([x, shortcut])
        shortcut = x  # 28x28x512

[ Conv 4 ]

# Conv4_x -> 6
for i in range(6):
    if i == 0:
        x = layers.Conv2D(256, (1, 1), strides=2, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(256, (3, 3), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(1024, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        # In case of i = 0 (to match dimensions):
        # project the shortcut so it can be added to x, 28x28x512 -> 14x14x1024
        shortcut = layers.Conv2D(1024, (1, 1), strides=2, padding='same')(shortcut)
        shortcut = layers.BatchNormalization()(shortcut)
        shortcut = layers.Activation('relu')(shortcut)
        x = layers.Add()([x, shortcut])
        shortcut = x  # 14x14x1024
    else:
        x = layers.Conv2D(256, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(256, (3, 3), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(1024, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Add()([x, shortcut])
        shortcut = x  # 14x14x1024

[ Conv 5 ]

# Conv5_x -> 3
for i in range(3):
    if i == 0:
        x = layers.Conv2D(512, (1, 1), strides=2, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(512, (3, 3), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(2048, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        # In case of i = 0 (to match dimensions):
        # project the shortcut so it can be added to x, 14x14x1024 -> 7x7x2048
        shortcut = layers.Conv2D(2048, (1, 1), strides=2, padding='same')(shortcut)
        shortcut = layers.BatchNormalization()(shortcut)
        shortcut = layers.Activation('relu')(shortcut)
        x = layers.Add()([x, shortcut])
        shortcut = x  # 7x7x2048
    else:
        x = layers.Conv2D(512, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(512, (3, 3), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Conv2D(2048, (1, 1), strides=1, padding='same')(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation('relu')(x)
        x = layers.Add()([x, shortcut])
        shortcut = x  # 7x7x2048

[ FC Layer ]

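Finally, let's look at the operations at the end of the network.

In the paper, this part consists of global average pooling, a 1000-way fully connected layer, and softmax.

However, my dataset has only 2 classes, so this part must be adapted to the dataset: the fully connected layer has 2 outputs instead of 1000.

# 7x7x2048 -> 2048 (equivalent to AdaptiveAvgPool2d(1) followed by flatten in PyTorch)
x = layers.GlobalAveragePooling2D()(x)
# classes = 2
x = layers.Dense(2, activation='softmax')(x)
return x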
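What these last two layers compute can be sketched without TensorFlow: `GlobalAveragePooling2D` averages each channel over all spatial positions (7 x 7 in ResNet50, giving one value per channel), and the `Dense` layer with softmax turns the resulting vector into class probabilities. The sizes (a 2 x 2 map with 3 channels) and the weights below are made up purely for illustration.

```python
import math


def global_average_pool(feature_map):
    """feature_map[h][w][c] -> list of per-channel means (like GlobalAveragePooling2D)."""
    positions = [pixel for row in feature_map for pixel in row]
    channels = len(positions[0])
    return [sum(p[c] for p in positions) / len(positions) for c in range(channels)]


def dense_softmax(features, weights, biases):
    """weights[c][k]: one logit per class k, followed by a numerically stable softmax."""
    logits = [sum(f * weights[c][k] for c, f in enumerate(features)) + biases[k]
              for k in range(len(biases))]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    fmap = [[[1.0, 0.0, 2.0], [3.0, 0.0, 2.0]],
            [[1.0, 4.0, 2.0], [3.0, 0.0, 2.0]]]   # 2x2 spatial, 3 channels
    pooled = global_average_pool(fmap)             # [2.0, 1.0, 2.0]
    probs = dense_softmax(pooled, weights=[[0.5, -0.5], [1.0, 0.0], [0.0, 0.0]],
                          biases=[0.0, 0.0])       # 2 classes
    print(pooled, probs)
```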


<Entire Code>

import os

# Select the GPU before TensorFlow initializes its devices
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

import tensorflow as tf
from tensorflow.keras import layers
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import matplotlib.pyplot as plt

gpus = tf.config.list_physical_devices('GPU')
if gpus:
    try:
        # Currently, memory growth needs to be the same across GPUs
        for gpu in gpus:
            tf.config.experimental.set_memory_growth(gpu, True)
    except RuntimeError as e:
        # Memory growth must be set before GPUs have been initialized
        print(e)

# Check that TensorFlow sees the GPU (replaces the deprecated tf.test.is_gpu_available())
print(gpus)

'''
< ResNet Architecture>
- ResNet "50"-layer
- 5_x Layer (1,3,4,6,3)
- skip connection
- Sequential model X (built with the functional API)
- Batch Normalization right after each convolution and before activation
'''


def ResNet(x):
    # input = 224 x 224 x 3
    # Conv1 -> 1
    x = layers.Conv2D(64, (7, 7), strides=2, padding='same', input_shape=(224, 224, 3))(x)  # 112x112x64
    x = layers.BatchNormalization()(x)
    x = layers.Activation('relu')(x)

    x = layers.MaxPool2D((3, 3), 2, padding='same')(x)  # 56x56x64
    shortcut = x

    # Conv2_x -> 3
    for i in range(3):
        if i == 0:
            x = layers.Conv2D(64, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(64, (3, 3), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(256, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            # In case of i = 0 (to match dimensions):
            # project the shortcut so it can be added to x, 56x56x64 -> 56x56x256
            shortcut = layers.Conv2D(256, (1, 1), strides=1, padding='same')(shortcut)
            shortcut = layers.BatchNormalization()(shortcut)
            shortcut = layers.Activation('relu')(shortcut)
            x = layers.Add()([x, shortcut])
            shortcut = x  # 56x56x256
        else:
            x = layers.Conv2D(64, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(64, (3, 3), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(256, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Add()([x, shortcut])
            shortcut = x  # 56x56x256

    # Conv3_x -> 4
    for i in range(4):
        if i == 0:
            x = layers.Conv2D(128, (1, 1), strides=2, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(128, (3, 3), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(512, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            # In case of i = 0 (to match dimensions):
            # project the shortcut so it can be added to x, 56x56x256 -> 28x28x512
            shortcut = layers.Conv2D(512, (1, 1), strides=2, padding='same')(shortcut)
            shortcut = layers.BatchNormalization()(shortcut)
            shortcut = layers.Activation('relu')(shortcut)
            x = layers.Add()([x, shortcut])
            shortcut = x  # 28x28x512
        else:
            x = layers.Conv2D(128, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(128, (3, 3), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(512, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Add()([x, shortcut])
            shortcut = x  # 28x28x512

    # Conv4_x -> 6
    for i in range(6):
        if i == 0:
            x = layers.Conv2D(256, (1, 1), strides=2, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(256, (3, 3), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(1024, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            # In case of i = 0 (to match dimensions):
            # project the shortcut so it can be added to x, 28x28x512 -> 14x14x1024
            shortcut = layers.Conv2D(1024, (1, 1), strides=2, padding='same')(shortcut)
            shortcut = layers.BatchNormalization()(shortcut)
            shortcut = layers.Activation('relu')(shortcut)
            x = layers.Add()([x, shortcut])
            shortcut = x  # 14x14x1024
        else:
            x = layers.Conv2D(256, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(256, (3, 3), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(1024, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Add()([x, shortcut])
            shortcut = x  # 14x14x1024

    # Conv5_x -> 3
    for i in range(3):
        if i == 0:
            x = layers.Conv2D(512, (1, 1), strides=2, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(512, (3, 3), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(2048, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            # In case of i = 0 (to match dimensions):
            # project the shortcut so it can be added to x, 14x14x1024 -> 7x7x2048
            shortcut = layers.Conv2D(2048, (1, 1), strides=2, padding='same')(shortcut)
            shortcut = layers.BatchNormalization()(shortcut)
            shortcut = layers.Activation('relu')(shortcut)
            x = layers.Add()([x, shortcut])
            shortcut = x  # 7x7x2048
        else:
            x = layers.Conv2D(512, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(512, (3, 3), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Conv2D(2048, (1, 1), strides=1, padding='same')(x)
            x = layers.BatchNormalization()(x)
            x = layers.Activation('relu')(x)
            x = layers.Add()([x, shortcut])
            shortcut = x  # 7x7x2048

    # 7x7x2048 -> 2048 (equivalent to AdaptiveAvgPool2d(1) followed by flatten in PyTorch)
    x = layers.GlobalAveragePooling2D()(x)
    # classes = 2
    x = layers.Dense(2, activation='softmax')(x)
    return x

# Dataset (Kaggle Cat and Dog Dataset)
dataset_path = '/home/kellybjs/Cat_Dog_Dataset'

train_dataset_path = os.path.join(dataset_path, 'train_set')
train_data_generator = ImageDataGenerator(rescale=1. / 255)
train_dataset = train_data_generator.flow_from_directory(train_dataset_path,
                                                         shuffle=True,
                                                         target_size=(224, 224),
                                                         batch_size=32,
                                                         class_mode='categorical')

valid_dataset_path = os.path.join(dataset_path, 'validation_set')
valid_data_generator = ImageDataGenerator(rescale=1. / 255)
valid_dataset = valid_data_generator.flow_from_directory(valid_dataset_path,
                                                         shuffle=True,
                                                         target_size=(224, 224),
                                                         batch_size=32,
                                                         class_mode='categorical')

inputs = layers.Input(shape=(224, 224, 3), dtype='float32', name='input')

# Train
model = tf.keras.Model(inputs, ResNet(inputs))
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.001, momentum=0.9),
              loss='categorical_crossentropy',
              metrics=['acc'])
model.summary()

# fit_generator is deprecated; Model.fit accepts the generators directly
train = model.fit(train_dataset, epochs=50, validation_data=valid_dataset)

# Accuracy graph
plt.figure(1)
plt.plot(train.history['acc'])
plt.plot(train.history['val_acc'])
plt.title('Accuracy')
plt.ylabel('accuracy')
plt.xlabel('epoch')
plt.legend(['train', 'validation'], loc='upper left')
plt.savefig('ResNet_Accuracy_1.png')

# Loss graph
plt.figure(0)
plt.plot(train.history['loss'])
plt.plot(train.history['val_loss'])
plt.title('Loss')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(['train', 'validation'], loc='upper left')
plt.savefig('ResNet_Loss_1.png')

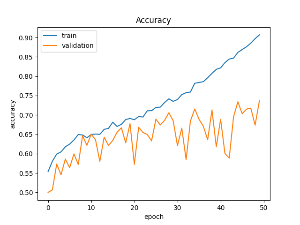

Result

위 코드를 적용한 Train 및 Validation Accuracy 결과이다.

Train 시에는 최대 약 90%, Validation 시에는 약 60~70%의 정확도가 나오는 것을 볼 수 있다.

Validation 정확도가 비교적 높지 않아, 파라미터 수정 및 재학습 예정이다.

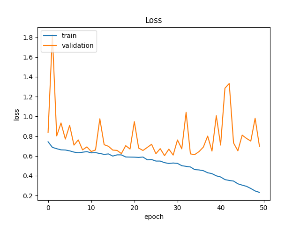

위 코드를 적용한 Train 및 Validation Loss 결과이다.

두 그래프 모두 점차 Loss 가 줄어드는 것이 보이나, Validation의 경우 후반에 많이 진동하는 점이 아쉽다.

이 또한 위 Accuracy 와 함께 보완해야할 점으로 보인다.